
The benefit of hindsight: what we got wrong – and why

Introduction

Looking at past predictions can be an amusing endeavour: in 1900, an American engineer predicted that by the year 2000 the letters C, X and Q would have become obsolete (because they were unnecessary); that mosquitoes, flies and all wild animals would have disappeared; and that gymnastics would be mandatory for all.1 Fifty years later, the science editor of the New York Times was sure that by 2000 humans would eat sweets made from sawdust and wood pulp, and that chemical factories would convert discarded paper table linen and rayon underwear into candy.2 In 1966, Time magazine was certain that at the turn of the millennium, humans would travel on ballistic missiles, all viral and bacterial diseases would have been wiped out, only 10% of the population would work, and drugs to regulate mood disorders would be widely available. As the piece put it, ‘if a wife or husband seems to be unusually grouchy on a given evening, a spouse will be able to pop down to the corner drugstore, buy some anti-grouch pills, and slip them into the coffee.’3 Needless to say, these predictions turned out to be inaccurate.

But reviewing past statements on the future is more than entertainment: it offers useful insights into how foresight can be improved, because it helps us understand the mistakes we can make whenever we try to predict how the future will unfold. And foresight is to decision-making what reconnaissance is to warfare: without it, we stumble ahead rather than follow a strategic vision. This is particularly true in today’s complex and fast-moving world. As a result, foresight activities are on the rise not just in European Union institutions but also in EU member states – but they could still improve in frequency, quality and self-assessment.

It is important to note two things at the outset. Firstly, the vast majority of predictions are neither entirely right nor entirely wrong, but fall somewhere in between. Secondly, to conclude from erroneous predictions that the entire exercise is futile would be to misconstrue what foresight is about: it is a creative exercise, and as such it does not have to be 100% accurate to be useful. Instead, it has to be stimulating, open new doors of thinking, show different ways in which the future can unfold – and perhaps even lead to a change in policy.

We broadly identify six reasons why past statements on the future turned out to be inaccurate: status quo bias, linear thinking, exclusionary thinking, emotions such as fear and hope – but also simple lack of knowledge and (perhaps the best way to be wrong) a change in policy.

This analysis is concerned not with the things that were missed (such as the Arab Spring, Brexit or September 11), but with things we thought would happen that failed to materialise. What are the patterns in our foresight errors – and what can we learn from them?

Tomorrow will be a lot like today

The first error pattern is thinking about the future from today’s point of view. In a way, this is logical, since tomorrow will in many ways be an extension of today. However, standing too close to the present means overestimating current issues and trends, and extrapolating them into the future.

A good example of this is George Orwell’s 1984: although the novel claims to be about the future, it closely echoes the Soviet Union of the time of writing, while its setting is essentially the London of the 1940s.4 Another example is Francis Fukuyama’s 1989 essay ‘The End of History?’, which posited that liberal democracy would be the endpoint of humanity’s social and political evolution – an argument very much inspired by the crumbling of the Soviet bloc at the time. Although much chided for it, Fukuyama was not the only one to interpret the present as a premonition of the future: Karl Marx, Samuel Huntington, Auguste Comte and Herbert Spencer, too, thought of the future as more of today.


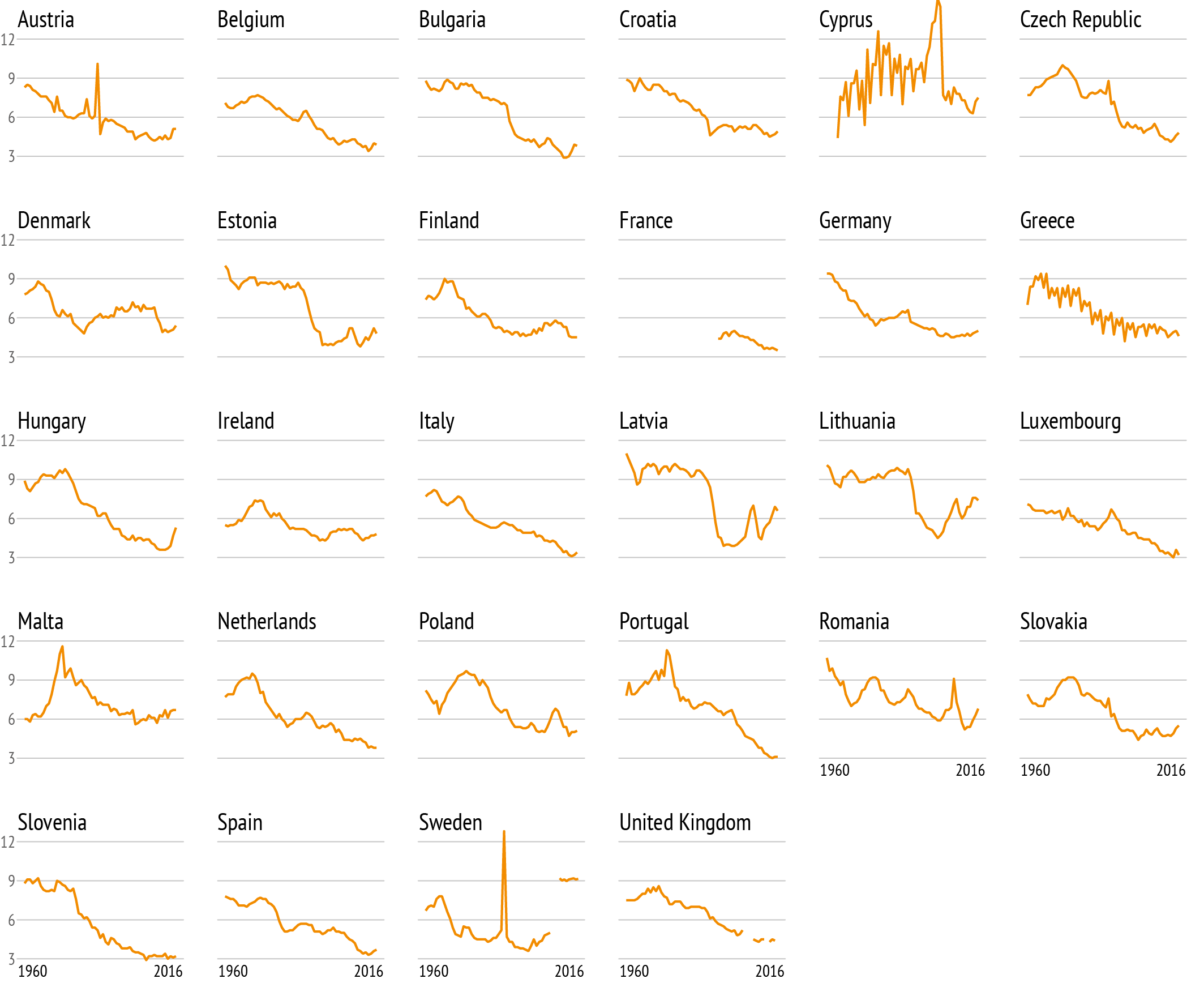

Another example of misreading current trends concerns prophecies of the imminent death of marriage: rising divorce rates from the 1960s onwards led several futurists to predict the end of marriage altogether – but instead, marriage rates have stabilised since the 1990s, albeit at half their former level.

Or take the participation of women in the labour force: their role was not anticipated by any futurist, which reflects not only the prevailing social order of past decades but perhaps also the fact that most futurists at the time were men (as indeed they still are).5

A more recent example of status quo bias is the idea that China will eventually democratise, which was briefly popular around the time of the Arab Spring. Fukuyama (again) was quick to predict this, and so was the European Union’s own 2012 report Global Trends to 2030, which anticipated ‘an environment that is more likely to favour democracy and fundamental rights.’6 This vision was not only very much inspired by the 2011 Zeitgeist, but was also the result of an inbuilt emotional bias.

From A to B: the future is in 3D

The futurists who predicted the end of marriage were not just guilty of status quo bias: they also had a linear understanding of the future. Instead of approaching the years to come as a three-dimensional exercise in which dynamics interact, new developments arise and multiple scenarios unfold, they simply took a recent development and projected it into the future. For example, a 1983 article on the future of marriage expressed concern that ‘at the pace of increase exhibited between 1960 and 1980 in the United States, the participation rate for married women age 25-44 would reach the married male rate (97.1 percent) in only fifteen years!’7 What this article did not take into account was that, for a variety of reasons, the labour-force participation rate of married women plateaued at 60% in the early 1990s and has remained at that level since.
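The straight-line reasoning behind that 1983 projection can be sketched in a few lines of Python. This is a minimal illustration, not the article’s method: `linear_years_to_target` takes the average annual change between two observations and asks how long that pace needs to reach a target, while `logistic` is a hypothetical saturating curve added for contrast, since a trend that plateaus is exactly what a straight line cannot represent. The function names, rates and curve parameters are assumptions chosen for illustration; they are not the data from the 1983 article.

```python
import math


def linear_years_to_target(value_start, value_end, span_years, target):
    """Years after the last observation until a straight-line trend hits `target`.

    Uses the average annual change between two observations, i.e. the same
    'pace of increase' logic as the projection quoted above.
    """
    pace = (value_end - value_start) / span_years  # units per year
    if pace <= 0:
        return math.inf  # a flat or falling trend never reaches a higher target
    return (target - value_end) / pace


def logistic(t, ceiling, midpoint, steepness):
    """Saturating curve: rises, then levels off below `ceiling` (a plateau)."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))


# HYPOTHETICAL rates in percent (not the 1983 article's data), for illustration only.
years = linear_years_to_target(25.0, 50.0, span_years=20, target=97.1)
print(f"A straight line reaches 97.1% about {years:.1f} years after the last observation")

# A HYPOTHETICAL curve that plateaus at 60% instead of running on to 97.1%.
for year in (1960, 1980, 2000, 2020):
    print(year, round(logistic(year, ceiling=60.0, midpoint=1975, steepness=0.15), 1))
```

With these hypothetical inputs, the straight line reaches 97.1% a little under 38 years after the last observation, whereas the saturating curve never rises above its 60% ceiling however far ahead it is run.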

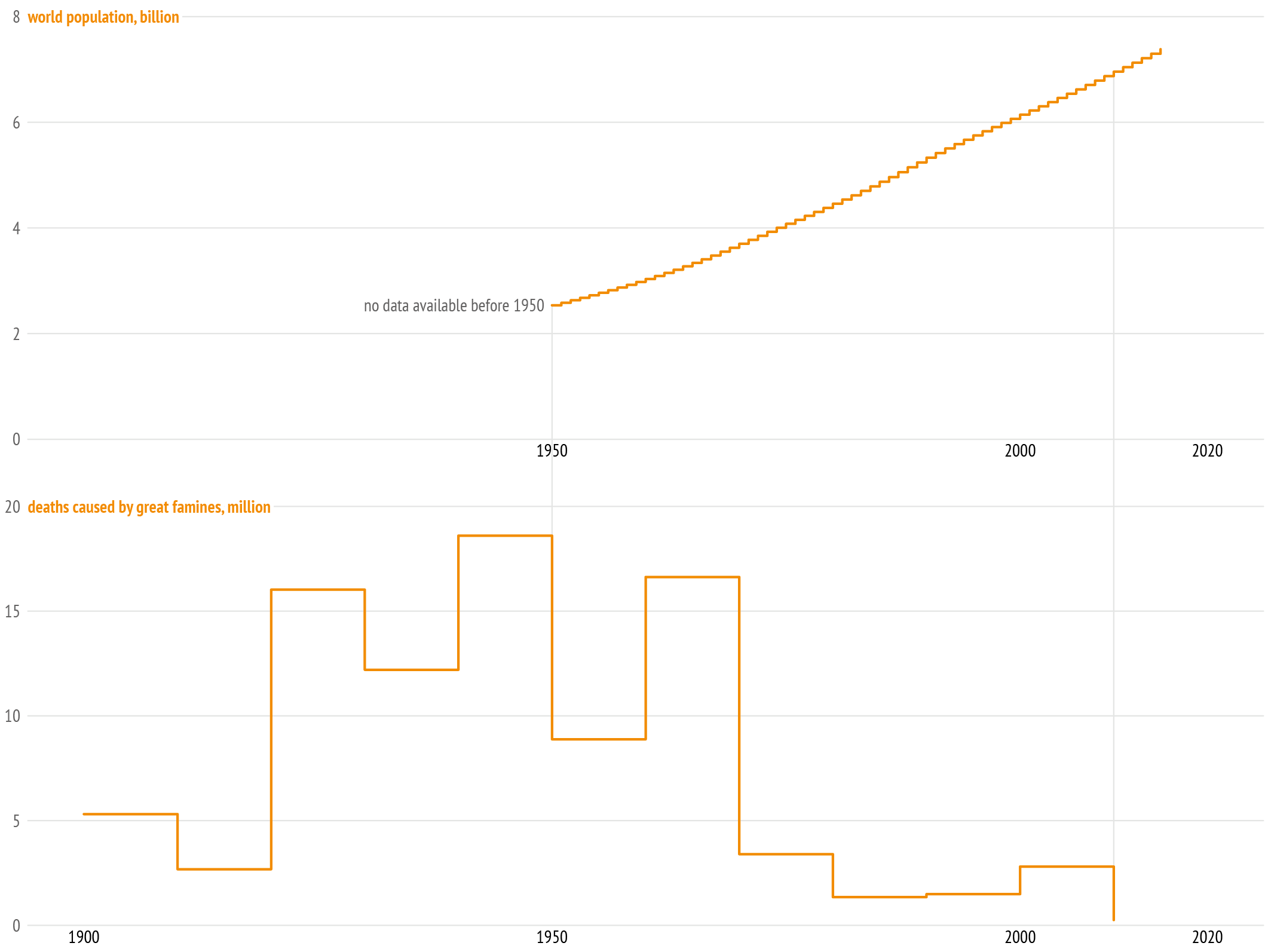

Or take another example: in 1968, as the world’s population neared 3.6 billion, Paul Ehrlich published The Population Bomb. A dramatic book, it stated that ‘the battle to feed all of humanity is over.’ Ehrlich was sure that in the coming years ‘hundreds of millions of people are going to starve to death’, and that nothing could be done about it. Convinced that the growing human population would inevitably lead to large-scale deaths from starvation, he predicted that England would disappear by the year 2000 and that American life expectancy would fall by nearly three decades, to 42, by 1980. Against this backdrop, Robert McNamara, then president of the World Bank, even declared overpopulation a graver threat than nuclear war.

Breaking up?

Crude marriage rate (marriages per 1000 inhabitants)

Data: Eurostat, 2018

But Ehrlich and his supporters (like Malthus before them) had taken two known developments – population growth and population needs – and extended them based on the recent past (a technique referred to as ‘engineered forecast’). Ehrlich assumed that as a population grew, its needs would grow along with it – not an entirely wrong assessment, since the human need for sustenance is constant. But his calculation left out the one variable capable of change: human behaviour. (In addition, he simply assumed that famines are the result of overpopulation, when they are more often than not the result of warfare.8)

And humans do change their behaviour in the face of scarcity, a fact that economic forecasts (in contrast to engineered forecasts) did take into account. Ehrlich’s main opponent, economist Julian Simon, showed that whenever a resource becomes scarce, humans adapt, invent new methods or find cheaper alternatives.9

Ehrlich was of course not alone in his linear thinking: in 1972, the Club of Rome used a computer model to assess the future of the world economic system. The resulting report, Limits to Growth, foresaw that ‘if the present growth trends in world population, industrialisation, pollution levels, food production and resource depletion continue unchanged, the limits to growth on this planet will be reached within the next one hundred years. The most probable results will be rather sudden and uncontrollable decline in both population and industrial capacity sometime before the year 2010.’10

Always on the up?

As the world's population steadily increased, great famines claimed fewer and fewer lives from the 1970s onwards.

Data: Eurostat, 2018

Although the report drew attention to policy issues still relevant today, its methodology was as problematic as its bombastic prediction: the model treated variables as totals (e.g. the world population) or used rough averages (such as industrial output per capita) without taking into account vast differences among countries. As with Ehrlich, the model established causal links between variables without factoring in socio-economic disaggregation and changes in behaviour, and confused correlation with causation.11

Another, now largely forgotten prediction was the idea that the Earth was cooling, and perhaps even on the brink of entering another ice age. Although this assessment was short-lived and not widely shared by scientists, the media promoted it in the 1970s (at a time when there was already a consensus that the Earth was in fact warming).12 Those who expected cooling had simply looked at recent temperature records, which did show a dip, and extrapolated linearly from there; but a longer view of temperatures, together with measurements of carbon dioxide in the atmosphere, showed that this was not a trend: the opposite would (and did) soon become manifest.

The winner takes it all

Another common error in foresight is a finite understanding of things: essentially the idea that certain markets or sectors have only a limited amount of room, meaning that innovation will by default lead to the demise of an existing system (or even species). This misperception can be found in many instances of foresight: for instance, the gradual replacement of the horse in warfare, agriculture and transport was expected to lead to the decline (some even surmised the extinction) of the horse. But instead, humans decided to keep horses for recreational purposes. Today, the global horse population stands at 58 million – nearly four times as many as in 1914.13

Another example is the idea that the book would become a thing of the past (popularised in the dystopian novel Fahrenheit 451), supplanted first by radio, then by television and later by the internet. But in fact, book sales keep increasing. It turns out that Riepl’s Law (formulated in 1913 by Wolfgang Riepl, then editor-in-chief of the Nürnberger Nachrichten) was accurate: new media complement old ones instead of taking their place.14 A similar fear permeated the music industry: some worried that the record player (and later, the internet) would destroy music altogether – but never have humans produced, and sold, as much music and as many musical instruments as today.15

Exclusionary thinking can also blind us to existing phenomena and to the impact new developments can have on them. In transport, for instance, every past innovation replaced a previous one, which led to the assumption that aerial tramways and moving walkways would one day become the main modes of inner-city transport, replacing cars and underground trains. Instead, new technology had interesting knock-on effects on one of the oldest means of transport, the bicycle: bike-sharing, enabled by GPS, online payment systems and Bluetooth, has made it the true winner of this decade (and probably the next). In the United States, for instance, the number of rides on rental bicycles increased from 320,000 in 2010 to 28 million in 2016.16

The power of fear: the future is nigh

Emotion in foresight is a powerful tool which, in the right dose, can propel decision-makers into action. But as one study has shown, both excessive optimism and excessive pessimism have led to erroneous assumptions.17 Too much pessimism, in particular, has at times produced catastrophic thinking rather than an accurate picture of the future. One example is the Club of Rome report mentioned above: it was grounded in a few very pessimistic assumptions about mankind and took no account of changing social values, human needs or even aspirations.

Another example of excessive pessimism leading to an apocalyptic vision of the future concerns the highly emotive issue of nuclear weapons: ever since their development, numerous publications have posited that their use would lead not ‘just’ to large-scale death, but to the extinction of humanity altogether. The ‘nuclear holocaust’ was popularised in Nevil Shute’s 1957 novel On the Beach and Jonathan Schell’s 1982 book The Fate of the Earth, and fed by the idea that the use of atomic weapons would destroy the ozone layer and cause a dramatic drop in temperatures on Earth.18 Today, scientists of course still agree on the destructive power of nuclear weapons (the use of 100 warheads would kill 34 million people), but they do not expect such weapons to destroy the ozone layer, and parts of the planet would remain habitable. What drove the hyperbolic argument of megadeath, however, was not scientific accuracy but the fear that nuclear weapons would lead to the annihilation of the planet.19


In warfare, technological innovation generally breeds fear: the idea of an all-powerful weapon capable of inflicting indiscriminate violence surfaces regularly. Most recently, the prospect of deploying artificial intelligence on the battlefield has raised the spectre of ‘killer robots’ – but even the arrival of the airplane was met with suspicion by contemporaries. As Lawrence Freedman shows in The Future of War, the vast majority of publications discussing the impact of technology on war are not only heavily afflicted by status quo bias but also tend to underestimate the opponent’s capacity to adapt to the innovation. In that sense, while military foresight often searches for the one weapon that can deliver the decisive blow, victory is ultimately a matter first of strategy and only second of weaponry.20

Fear has also generated other worst-case scenarios: the idea that new technologies would lead to dehumanisation, a breakdown of values and social networks, and indeed the merger of man and machine has been voiced repeatedly. Aldous Huxley’s 1932 novel Brave New World is only one example; in a 1965 article, Alvin Toffler was sure that ‘the human “body” in the future will often consist of a mixture of organic and machine components. (…) Such fusions of man and machine – called “Cyborgs” – are closer than most people suspect.’21 Since then, the portrayal of cyborgs in the media has been overwhelmingly negative, whether in The Terminator, RoboCop or the character of Darth Vader in Star Wars. In reality, ‘cyborg’ technology could greatly enhance human existence, for instance in the treatment of diseases or of accident victims.22

Last but not least, fears of a pandemic frequently lead us to assume the worst: the 2009 swine flu, for instance, failed to become the killer virus many thought it was, and turned out to be a relatively mild strain of the regular flu. Although the World Health Organization was aware of this possible outcome, the media magnified only the most catastrophic assumptions.23

Wishing is not foresight: the power of hope

Just like fear, hope can lead us to make statements that turn out to be inaccurate. For instance, the ideal of a world without violence led the authors of the 2012 report Global Trends to 2030 to assume that ‘the near universal spread of human rights ideals is gradually delegitimising the use of force as a means of pursuing the national interest.’24 But this was a future the authors wished to see rather than an evidence-based assumption: since then, the world has seen several intra- and interstate conflicts erupt, not just in the Middle East but also in Europe’s neighbourhood. Similarly, the idea that in the future, cyberspace will be ‘largely and increasingly an area of freedom of expression’25 expressed hope rather than fact (it was also, again, tainted by status quo bias, overestimating the role of social media in the onset of the Arab Spring).

Book titles per capita

Unique book titles per million inhabitants, 1900-2009

Data: Our World in Data, 2018, based on Fink-Jensen 2015

This kind of wishful thinking is particularly visible in the field of new technologies: a quick look at the Back to the Future trilogy shows that we generally expect the future to be more advanced than it turns out to be. A 2005 ‘technology timeline’ compiled by the Foresight and Futurology division of British Telecom predicted that we would be able to bring species back from extinction by 2010, that by 2015 artificial intelligence entities would not only sit in parliament but also teach our children, and that they would even win a Nobel Prize in the 2020s.26

But the tricky thing about technological foresight is not predicting the technology itself; it is anticipating how humans will relate to it. For instance, Google Glass (a pair of ‘smart glasses’ capable of connecting the wearer to the internet) flopped, while SMS, developed as a side feature, was an instant success. Concorde, a supersonic aircraft, was a technological marvel but failed to generate the necessary profit. Or take online shopping: in contrast to books or electronics, grocery shopping remains a stubbornly offline activity because customers prefer to choose and buy such goods in person (in leading EU markets, only between 0.3% and 7.5% of grocery sales are made online).27 And while humans are indeed capable of producing ready-made food as predicted, foresight did not anticipate that people would feel uneasy about it: today, the organic food market is growing at double-digit rates and is worth €33.5 billion. Foresight was also wrong about the capacity of computers to translate languages: because computers lack cultural awareness, humour, context and creativity, translation still requires input from a human translator – and those who predicted fully automatic translation were probably just wishing it would spare them the need to learn foreign languages.

(Un)known unknowns

While the error patterns above are all, in one way or another, based on wrong assumptions, this category is different: it shows that some predictions turned out to be wrong because the necessary knowledge was not available when they were made. One salient example is the idea of colonising the moon, first mentioned by Bishop John Wilkins in 1640: in 1959, the United States launched Project Horizon, designed to settle human beings on the moon by 1967.28 However, the project concluded that while colonising the moon was feasible, it would be problematic and costly because of the difficult conditions there.

Another example of a prediction overturned by new facts concerns the much-heralded end of oil: in 1937, it was said that world oil supplies would last only another 15 years; in 1972, another 40 (current predictions are that the world’s oil reserves will last until 2070).29 But these forecasts were based on the information and estimates of oil resources available at the time: they could not take into account oilfields yet to be discovered.
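Forecasts of this kind are often based on a static reserves-to-production ratio: known reserves divided by current annual output. The minimal Python sketch below, using purely hypothetical quantities and illustrative function names, shows why such a ‘years left’ figure shifts as soon as new discoveries are added to the reserve base, which is precisely what a one-off forecast cannot see.

```python
def years_remaining(known_reserves: float, annual_production: float) -> float:
    """Static reserves-to-production ratio: years until known reserves run out
    at current output, assuming constant production and no new discoveries."""
    if annual_production <= 0:
        raise ValueError("annual_production must be positive")
    return known_reserves / annual_production


def ratio_over_time(reserves: float, production: float,
                    discoveries_per_year: float, years: int) -> list[float]:
    """Recompute the static ratio at the end of each year.

    Each year, output is subtracted from the reserve base and newly discovered
    reserves are added; reserves are floored at zero.
    """
    ratios = []
    for _ in range(years):
        reserves = max(0.0, reserves - production + discoveries_per_year)
        ratios.append(years_remaining(reserves, production))
    return ratios


# HYPOTHETICAL quantities in arbitrary units, for illustration only.
print(years_remaining(600.0, 40.0))            # the one-off forecast
print(ratio_over_time(600.0, 40.0, 0.0, 3))    # no discoveries: the horizon shrinks
print(ratio_over_time(600.0, 40.0, 40.0, 3))   # discoveries match output: it never moves
```

The design choice is deliberately crude: holding production constant isolates the effect of discoveries, which is the variable the early oil forecasts could not include.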

That said, a lack of knowledge sometimes stems not from the knowledge being unavailable, but from it not being sought out. For instance, the National Intelligence Council’s 2000 report was sure that Russia’s global posture would decline in the years up to 2015: ‘Russia remains internally weak and institutionally linked to the international system primarily through its permanent seat on the UN Security Council’30 – a statement disproved by recent Russian activities in the Middle East and Ukraine. Although in part the result of 1990s status quo bias, this assessment also stemmed from a decline in expertise on Russia in US government circles after the end of the Cold War, and from too exclusive a focus on the Russian economy, which, though underperforming, has so far not undermined Moscow’s power ambitions.31

In sum: how to be right – and wrong

There is one last category of predictions which (luckily) never materialised: the successful warnings. Here, predictions triggered a policy change that averted the anticipated scenario. One example is the fear that computers would crash on 1 January 2000 because their software stored years with only two digits: this outcome was avoided because technicians set to work on preventing it as soon as the problem was identified. Similarly, the depletion of the ozone layer was halted thanks to the 1987 Montreal Protocol, which phased out chlorofluorocarbons, and acid rain (a consequence of burning fossil fuels) was curbed thanks to numerous initiatives also undertaken in the 1980s. Infection rates and deaths from HIV/AIDS have also fallen back to the levels of 1990, partly thanks to United Nations reports painting a bleak picture of the future.32

A further subcategory concerns predictions that did materialise, but not when expected. For instance, mobile phones and televisions arrived earlier than anticipated, while other innovations (such as self-driving cars) are arriving later. Women became active in the workforce much earlier than Star Trek imagined. Or take languages: H.G. Wells was convinced that increased interaction between cultures would soon lead to the merger of all languages into one (he thought it would be French).33 Some studies do indeed predict the extinction of more than 7,000 languages by 2065 – but only if these linear calculations prove accurate.

Lastly, a host of predictions could not be assessed simply because they were too imprecise. To avoid being wrong, we often prefer to err on the side of vagueness, but as a result our forecasts have less impact. For instance, the Global Trends to 2030 report mentioned above predicted that, owing to growing interconnectedness, humans would feel empowered as part of a global human community – a statement impossible to verify.34

As the analysis above shows, the main challenge in foresight is human nature itself. To improve our foresight capacities, we need to be aware, firstly, of the invisible obstacles created by our own psychology and, secondly, of the unpredictability of human behaviour, aspects of which may always remain beyond our comprehension because of a range of socio-cultural factors. In sum, we struggle less with imagining new developments than with anticipating how humans will respond to them.

To improve their own foresight capacities, decision-makers can consider the following: firstly, institutionalise regular foresight exercises involving entire teams; secondly, review their own statements on the future regularly; thirdly, thoroughly check their assumptions collectively.35 Only scorekeeping and regular review will improve foresight capabilities – a culture of ‘shaming’ predictions that turn out to be wide of the mark will only stifle foresight rather than make it better.36
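Scorekeeping presupposes a score. One common choice, and the kind of measure Tetlock and Gardner discuss in Superforecasting, is the Brier score: the mean squared difference between the probability a forecaster gave an event and what actually happened (1 if it occurred, 0 if it did not). Lower is better, and a forecaster who always answers ‘50%’ scores 0.25. The Python sketch below uses the common single-outcome form, which runs from 0 (perfect) to 1 (worst); the variant described in Superforecasting sums over both outcomes and therefore runs from 0 to 2. The function name and the track records in the example are hypothetical.

```python
from typing import Sequence


def brier_score(probabilities: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Single-outcome form: 0.0 is a perfect record, 1.0 the worst possible.
    """
    if len(probabilities) != len(outcomes):
        raise ValueError("need exactly one outcome per forecast")
    if not probabilities:
        raise ValueError("no forecasts to score")
    total = 0.0
    for p, outcome in zip(probabilities, outcomes):
        if not 0.0 <= p <= 1.0 or outcome not in (0, 1):
            raise ValueError("probabilities must lie in [0, 1]; outcomes must be 0 or 1")
        total += (p - outcome) ** 2
    return total / len(probabilities)


# HYPOTHETICAL track records: three yes/no questions and what actually happened.
happened = [1, 0, 1]
committed = [0.8, 0.2, 0.8]   # takes a clear position each time
hedged = [0.5, 0.5, 0.5]      # never commits either way

print(brier_score(committed, happened))
print(brier_score(hedged, happened))
```

In this hypothetical record, the forecaster who committed to clear positions scores about 0.04 and the one who always hedged scores 0.25; keeping such a tally over many questions is what turns regular review into genuine scorekeeping.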

References

* The author would like to thank Mat Burrows, Nikolaos Chatzimichalakis, Daniel Fiott, Maximilian Gaub, Andrew Monaghan, Eamonn Noonan, Stanislav Secrieru, Leo Schulte Nordholt, Gustav Lindstrom and John-Joseph Wilkins for their input on this article.

1 John Elfreth Watkins, “What May Happen in the Next Hundred Years”, Ladies’ Home Journal, December 1900.

2 Waldemar Kaempffert, “Miracles You’ll See In The Next Fifty Years”, Modern Mechanix, February 1950, http://blog.modernmechanix.com/miracles-youll-see-in-the-next-fifty-years/.

3 “The Futurists: Looking Toward A.D. 2000”, Time, February 25, 1966, http://content.time.com/time/magazine/article/0,9171,835128,00.html.

4 Thomas W. Cooper, “Fictional 1984 and Factual 1984”, in The Orwellian Moment: Hindsight and Foresight in the Post-1984 World, ed. Robert L. Savage et al. (Fayetteville: The University of Arkansas Press, 1989), 83-107.

5 Rose Eveleth, “Why Aren’t There More Women Futurists?”, The Atlantic, July 2015, https://www.theatlantic.com/technology/archive/2015/07/futurism-sexism-men/400097/.

6 Francis Fukuyama, “Is China Next?”, Wall Street Journal, March 2011, https://www.wsj.com/articles/SB10001424052748703560404576188981829658442; European Strategy and Policy Analysis System (ESPAS), Global Trends 2030 – Citizens in an Interconnected and Polycentric World, (Paris: European Union Institute for Security Studies, 2012), 28; 40; 45.

7 Kingsley Davis, “The Future of Marriage”, Bulletin of the American Academy of Arts and Sciences 36, no. 8 (May 1983): 15-43.

8 Stephen Devereux, Theories of Famine (New York: Harvester Wheatsheaf, 1993).

9 Julian L. Simon, The Ultimate Resource (Princeton, NJ: Princeton University Press, 1981).

10 Donella H. Meadows et al., The Limits to Growth: A Report to The Club of Rome (New York: Universe Books, 1972).

11 H.S.D. Cole, “Models of Doom: a Critique of The Limits to Growth”, University of Sussex, 1975; Graham M. Turner, “A Comparison of The Limits to Growth with 30 Years of Reality”, Global Environmental Change 18 (May 2008): 397-411.

12 “Bogy of a New Ice Age; Berlin Astronomer Discusses Its Possibilities and Chills German Hearts -- Glacier Onslaught Is Apparently Ages Away”, New York Times, February 1926, https://www.nytimes.com/1926/02/14/archives/bogy-of-a-new-ice-age-berlin-astronomer-discusses-its-possibilities.html; “Another Ice Age?”, Time, June 1974, http://content.time.com/time/magazine/article/0,9171,944914,00.html; “The Cooling World”, Newsweek, April 1975, https://html1-f.scribdassets.com/yal7w1ekg3t0s2a/images/1-9c290725b9.jpg.

13 Equine Heritage Institute, “Horse Facts”, http://www.equineheritageinstitute.org/learning-center/horse-facts/.

14 Wolfgang Riepl, Das Nachrichtenwesen des Altertums: Mit besonderer Rücksicht auf die Römer (Leipzig-Berlin: Teubner, 1913).

15 John Philip Sousa, “The Menace of Mechanical Music”, Appleton’s Magazine, 8 (1906): 278-284.

16 “The Vehicle of the Future Has Two Wheels, Handlebars, and Is a Bike”, Wired, May 2018, https://www.wired.com/story/vehicle-future-bike/.

17 Bernard Cazes, Histoire des futurs: Les figures de l’avenir de saint Augustin au XXIe siècle (Paris: Seghers, 1986), 85-89.

18 Jonathan Schell, The Fate of the Earth (New York: Alfred A. Knopf, 1982).

19 Brian Martin, “Critique of Nuclear Extinction”, Journal of Peace Research 19, no. 4 (1982): 287-300.

20 Lawrence Freedman, The Future of War: A History (London: Hurst, 2018).

21 Alvin Toffler, “The Future as a Way of Life”, Horizon Magazine VII, no. 3 (Summer 1965).

22 Quartz, “This Company Will Help You Become a Cyborg, One Implanted Sense at a Time”, June 26, 2016, https://qz.com/716095/this-company-will-help-you-become-a-cyborg-one-implanted-sense-at-a-time/.

23 “The Swine Flu Panic of 2009”, Spiegel Online, March 12, 2010, http://www.spiegel.de/international/world/reconstruction-of-a-mass-hysteria-the-swine-flu-panic-of-2009-a-682613.html.

24 Global Trends 2030 – Citizens in an Interconnected and Polycentric World, 95.

25 Ibid., 31.

26 Ian Neild and Ian Pearson, “2005 BT Technology Timeline”, August 2005, http://www.foresightfordevelopment.org/sobipro/55/60-2005-bt-technology-timeline.

27 Statista, “Share of Global Online Grocery Sales Based on Value in Leading European Union (EU) Countries in 2017”, https://www.statista.com/statistics/614717/online-grocery-shopping-in-the-european-union-eu/.

28 John Wilkins, A Discourse Concerning a New World and Another Planet (London: John Maynard, 1640).

29 Matt Novak, “We’ve Been Incorrectly Predicting Peak Oil For Over a Century”, Paleofuture, December 11, 2014, https://paleofuture.gizmodo.com/weve-been-incorrectly-predicting-peak-oil-for-over-a-ce-1668986354.

30 National Intelligence Council, “Global Trends 2015: A Dialogue about the Future with Non-Government Experts”, Washington DC, 2000, https://www.cia.gov/library/readingroom/document/0000516933.

31 Andrew Monaghan, The New Politics of Russia: Interpreting Change (Manchester: Manchester University Press, 2016), 26–59.

32 UNAIDS, “AIDS Epidemic Update”, Geneva, December 2001.

33 H.G. Wells, Anticipations of the Reaction of Mechanical and Scientific Progress upon Human Life and Thought (London: Chapman & Hall, 1901).

34 Global Trends 2030 – Citizens in an Interconnected and Polycentric World, 33.

35 George Loewenstein et al., “Projection Bias in Predicting Future Utility”, The Quarterly Journal of Economics 118 (2003): 1209-1248; Tom Vanderbilt, “Why Futurism Has a Cultural Blindspot”, Nautilus, October 2018, http://nautil.us/issue/65/in-plain-sight/why-futurism-has-a-cultural-blindspot-rp; Brian Resnick, “Intellectual Humility: the Importance of Knowing You Might be Wrong”, Vox, January 2019, https://www.vox.com/science-and-health/2019/1/4/17989224/intellectual-humility-explained-psychology-replication.

36 Philip E. Tetlock and Dan Gardner, Superforecasting: The Art and Science of Prediction (New York: Broadway Books, 2015).